Why is image classification of guns so difficult for AI software?

Newly published research shows that a combined Convolutional Neural Network and Support Vector Machine model correctly classifies images of firearms about 95% of the time.

Using technology to detect guns at schools has turned into a billion-dollar industry. Last week a new peer-reviewed paper, Advanced Computational Approaches to Gun Detection with CNN-SVM Model, was published. It explores how to use the most advanced AI models available to classify images as firearms:

This study tries to marry Convolutional Neural Networks (CNN) and Support Vector Machine (SVM) techniques to form a very advanced gun identification system. The system's unique feature is that it is designed to see five categories of weapons. This was done by carefully evaluating the validity of the model using various performance parameters tailored according to individual classes, which demonstrated the ability of the model to withstand and its precise nature.

Our model performed interestingly, with a total system accuracy value of 94.9185%. The results highlight the accuracy achieved in perceiving guns in these CNN-SVM hybrid models and suggest that such advanced systems may have further benefits for the protection and security of the general population.

Our study's robust methodology and impeccable weapon detection achieve optimal results, making it one of the first research trends in weapons detection technology.

A big difference between commercial school security vendors and an academic paper is that the paper publishes the details of the model’s accuracy. The best published result right now is about 95% accuracy. It’s likely that the “AI powered” security equipment and software being used at schools right now performs below the 95% accuracy reported in this paper. Products on the market now use older machine learning techniques like supervised learning with labeled datasets (the 2010s approach to early AI).

Is 95% (getting it wrong 5 out of 100 times) an acceptable threshold for making life safety decisions, such as sending police officers racing to a campus for an active shooter report that turns out to be a false alarm or, conversely, deciding that a real gun is not a threat? AI weapons scanners being used at schools right now are reported to have false alarm rates between 5% and 60% because the AI software cannot distinguish between the shape of a laptop and a weapon.
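To put the "5 out of 100" figure in concrete terms, here is a minimal arithmetic sketch. The image volume and the number of real guns are hypothetical, and it makes the simplifying assumption that the model is equally accurate on images with and without a gun, which the paper does not claim.

```python
def expected_outcomes(n_images, n_with_gun, accuracy):
    """Estimate alert outcomes for a batch of images at a given accuracy.

    Simplifying assumption (not from the paper): the model is equally
    accurate on images that contain a gun and images that do not.
    """
    n_without_gun = n_images - n_with_gun
    error_rate = 1.0 - accuracy
    true_alerts = n_with_gun * accuracy        # real guns correctly flagged
    missed_guns = n_with_gun * error_rate      # real guns the model misses
    false_alarms = n_without_gun * error_rate  # harmless images flagged
    total_alerts = true_alerts + false_alarms
    share_real = true_alerts / total_alerts if total_alerts else 0.0
    return {
        "true_alerts": true_alerts,
        "missed_guns": missed_guns,
        "false_alarms": false_alarms,
        "share_of_alerts_that_are_real": share_real,
    }


# Hypothetical volume: 10,000 images screened, 1 of which shows a real gun.
result = expected_outcomes(n_images=10_000, n_with_gun=1, accuracy=0.95)
for name, value in result.items():
    print(f"{name}: {value:.4f}")
```

Under these assumed numbers, roughly 500 of the 10,000 images would trigger a false alarm, so almost every alert a school receives would be a false one.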

How does AI software work?

As I explained in my CNN article, with school security, we want certainty. Is the person on CCTV holding a gun? We expect a “yes” or “no” answer. The problem is AI models provide “maybe” answers.

This is because AI models are based on probability.

When AI classifies an image as a weapon, an algorithm compares each new image to the patterns of weapons in its training data. AI doesn’t know what a gun is because a computer program doesn’t know what anything is. When an AI model is shown millions of pictures of guns, the model will try to find that shape and pattern in future images. It’s up to the software vendor to decide the probability threshold between a gun and not a gun.

This is a messy process. An umbrella could score 90% while a handgun that’s partially obscured by clothing might only be 60%. Do you want to avoid a false alarm for every umbrella, or get an alert for every handgun?
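The trade-off can be sketched in a few lines of code. The two scores are the ones from the example above. The function name and the three thresholds are hypothetical, chosen only to show that no single cutoff handles both objects correctly.

```python
# (description, model score, is it actually a gun?) using the scores from the text.
SCORED_OBJECTS = [
    ("umbrella", 0.90, False),
    ("partially obscured handgun", 0.60, True),
]


def evaluate_threshold(threshold, scored_objects=SCORED_OBJECTS):
    """Return (false_alarms, missed_guns) for a given alert threshold."""
    false_alarms = [name for name, score, is_gun in scored_objects
                    if score >= threshold and not is_gun]
    missed_guns = [name for name, score, is_gun in scored_objects
                   if score < threshold and is_gun]
    return false_alarms, missed_guns


# Hypothetical vendor choices for the alert threshold.
for threshold in (0.50, 0.75, 0.95):
    false_alarms, missed_guns = evaluate_threshold(threshold)
    print(f"threshold {threshold:.2f}: "
          f"false alarms={false_alarms}, missed guns={missed_guns}")
```

A low threshold catches the handgun but also alerts on the umbrella. A high threshold silences the umbrella but misses the handgun. A middle value manages to get both wrong.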

Inside the black box

What’s really going on inside the black box of machine learning software that classifies objects in images?

Computer vision doesn’t see objects the way that humans do because software is based on math. Each pixel in the image is given a numeric value, and the program then estimates the probability that clusters of neighboring pixels in a new image match the clusters of pixels it learned from training images.
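Here is a rough sketch of what "clusters of pixels as numbers" means, assuming the model is a convolutional network like the one in the paper discussed above. The tiny image and the filter values are made up for illustration. A real network learns thousands of filters from training data rather than using one written by hand.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding) and return weighted sums.

    This is the sliding-window operation used in convolutional layers
    (technically a cross-correlation: the kernel is not flipped).
    """
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    output = []
    for r in range(out_rows):
        row = []
        for c in range(out_cols):
            total = 0
            for i in range(k_rows):
                for j in range(k_cols):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output


# Hypothetical 3x5 grayscale image: dark (0) on the left, bright (9) on the right.
IMAGE = [
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
]

# Hypothetical filter that responds to a dark-to-bright vertical edge.
VERTICAL_EDGE = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

print(convolve2d(IMAGE, VERTICAL_EDGE))  # [[0, 27, 27]]
```

The output is zero where the pixels are uniform and large where the filter overlaps the edge. The software never "sees" an edge. It only computes that a cluster of numbers matches a pattern.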

While it sounds complicated, there are open-source tools that can analyze how deep learning algorithms work and what they are “seeing” in the images.

Without any special training, an existing image classification model can recognize attributes of a toy gun that looks like an AR-15 and the model assigns probabilities that the toy is different types of firearms.

With a picture of a real handgun, the open-source model can classify this handgun as a firearm but isn’t really sure if it’s a revolver (incorrect), assault rifle (incorrect), or rifle (incorrect). But the type of gun doesn’t really matter if you are just trying to detect a threat. The software’s confusion about what the object is does become a problem if you are trying to determine the difference between a toy gel blaster versus a real gun.
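One way to picture "the type of gun doesn't matter" is to add up the probabilities across every firearm label. This is a sketch with made-up numbers. The class names, the probabilities, and the helper function are all hypothetical and are not the actual output of the model used here.

```python
# Hypothetical set of labels that should all count as "firearm".
FIREARM_CLASSES = {"revolver", "assault rifle", "rifle"}


def firearm_probability(class_probs, firearm_classes=FIREARM_CLASSES):
    """Sum the probabilities of every label that counts as a firearm."""
    return sum(p for label, p in class_probs.items() if label in firearm_classes)


# Hypothetical model output for one image (probabilities sum to 1.0).
model_output = {
    "revolver": 0.35,
    "assault rifle": 0.30,
    "rifle": 0.20,
    "umbrella": 0.10,
    "laptop": 0.05,
}

top_label = max(model_output, key=model_output.get)
print(f"top single label: {top_label} ({model_output[top_label]:.2f})")
print(f"any firearm: {firearm_probability(model_output):.2f}")
```

In this made-up case no single label is convincing on its own, but the combined firearm probability is high. The same pooling is what makes a toy and a real gun hard to separate, since both spread their scores across the same firearm labels.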

Because a computer can’t see like a person, to understand how a model is classifying an image you need to calculate the gradients to measure the relationship between changes to a feature and changes in the model's predictions. For comparing these two images of guns, the gradient shows which pixels have the strongest effect on the model's predicted class probabilities.

The first step in analyzing how the software sees an image is to compute gradients across a series of images that fade from all black to the normal image, which reveals which features of the object (clusters of pixels) are being focused on.

The output from this analysis shows how transitioning from all black to clear features impacts the prediction probability of what the object is.

X-axis (alpha): Represents a parameter which scales the input from the baseline to the original input.

Y-axis (model p(target class)): Shows the predicted probability of the target class by the model.

The plot on the left is completely flat at 0 across all values of alpha. This indicates that the model's predicted probability for the target class remains 0 regardless of the value of alpha.

On the right, the plot shows how the average pixel gradients change as alpha varies from 0 to 1. The average pixel gradients start low, spike dramatically at a low value of alpha, then gradually decrease and stabilize as alpha increases. The spike in gradients suggests that there is a particular point where the input features have a significant impact on the model's output.
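The analysis described above resembles a technique commonly called integrated gradients (that name is my assumption, since the plots are not labeled with it). Here is a minimal sketch on a toy four-pixel "image" with a made-up logistic model standing in for the real classifier. The weights, bias, and pixel values are all hypothetical.

```python
import math


def model_prob(pixels, weights, bias):
    """Toy stand-in for a classifier: p(target class) for a flat list of pixels."""
    z = sum(w * p for w, p in zip(weights, pixels)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def model_grad(pixels, weights, bias):
    """Gradient of model_prob with respect to each pixel (analytic for this toy model)."""
    p = model_prob(pixels, weights, bias)
    return [p * (1.0 - p) * w for w in weights]


def integrated_gradients(image, baseline, weights, bias, steps=50):
    """Average the gradients along the path from baseline to image, then
    scale by how far each pixel moved. Returns one attribution per pixel."""
    avg_grad = [0.0] * len(image)
    for k in range(steps):
        alpha = (k + 0.5) / steps  # alpha runs from 0 (baseline) to 1 (image)
        interpolated = [b + alpha * (x - b) for x, b in zip(image, baseline)]
        grad = model_grad(interpolated, weights, bias)
        avg_grad = [a + g / steps for a, g in zip(avg_grad, grad)]
    return [(x - b) * a for x, b, a in zip(image, baseline, avg_grad)]


# Hypothetical values: four pixels, an all-black baseline, made-up weights.
IMAGE = [0.0, 0.8, 0.9, 0.1]
BASELINE = [0.0, 0.0, 0.0, 0.0]
WEIGHTS = [0.1, 2.0, 1.5, -0.5]
BIAS = -2.0

attributions = integrated_gradients(IMAGE, BASELINE, WEIGHTS, BIAS)
for index, value in enumerate(attributions):
    print(f"pixel {index}: attribution {value:+.4f}")
```

The attributions play the role of the attribution mask: the pixels with the largest values are the ones that moved the prediction the most. A useful sanity check is that the attributions add up to roughly the change in predicted probability between the all-black baseline and the real image.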

The pink dots (attribution mask) show that this computer vision model is classifying the object as a handgun based on pixels along the handgrip, trigger guard, and bottom of the barrel. When those are the primary features the software is looking for, a gun that is held sideways with these three features hidden probably won’t be classified correctly.

You can see exactly how gun detection software works in a demo posted on LinkedIn. When a handgun is held still against a contrasting background, the predictive algorithm assigns a 99.7% probability of a gun. When part of the weapon is obscured, the probability drops to 87%.

I wanted to try the same set of tests with the toy gel blaster gun that looks like an AR-15.

Unlike with the all-black handgun, which has straight lines and a uniform shape, the right plot for the gel blaster shows the model's sensitivity to input features changing across the whole range of alpha.

These fluctuations in the gradients suggest that there are points where the input features have varying impacts on the model's output, even though this does not translate into a higher predicted probability for the target class. With this much variation in the shape and colors, the software is confused about where to look.

Unlike the handgun, the classification model is “looking” all over the place at this image to try to figure out what it is. This shows how many features and variations of a weapon or an object that looks like a weapon could impact accurate classification.

Limited by the CCTV quality

Last week the St. Louis Police Department released CCTV footage from the CVPA High School shooting in October 2022.

Here is an image from the CCTV system of the 19-year-old shooter holding an AR-15 rifle as he walks down the hallway. From just looking at the camera image, there is no way to tell that he is holding a full-size military-style rifle.

After he walks around the school on camera for 15 minutes, there is finally a clear view of the rifle in an upstairs hallway.

Even this image is difficult for gun classification software because the wide-angle camera distorts the length of the weapon. For more information about the CCTV and body camera footage from the CVPA school shooting, here is my article about it.

Generative Pre-trained Transformers (GPT) vs. Standalone Image Classification

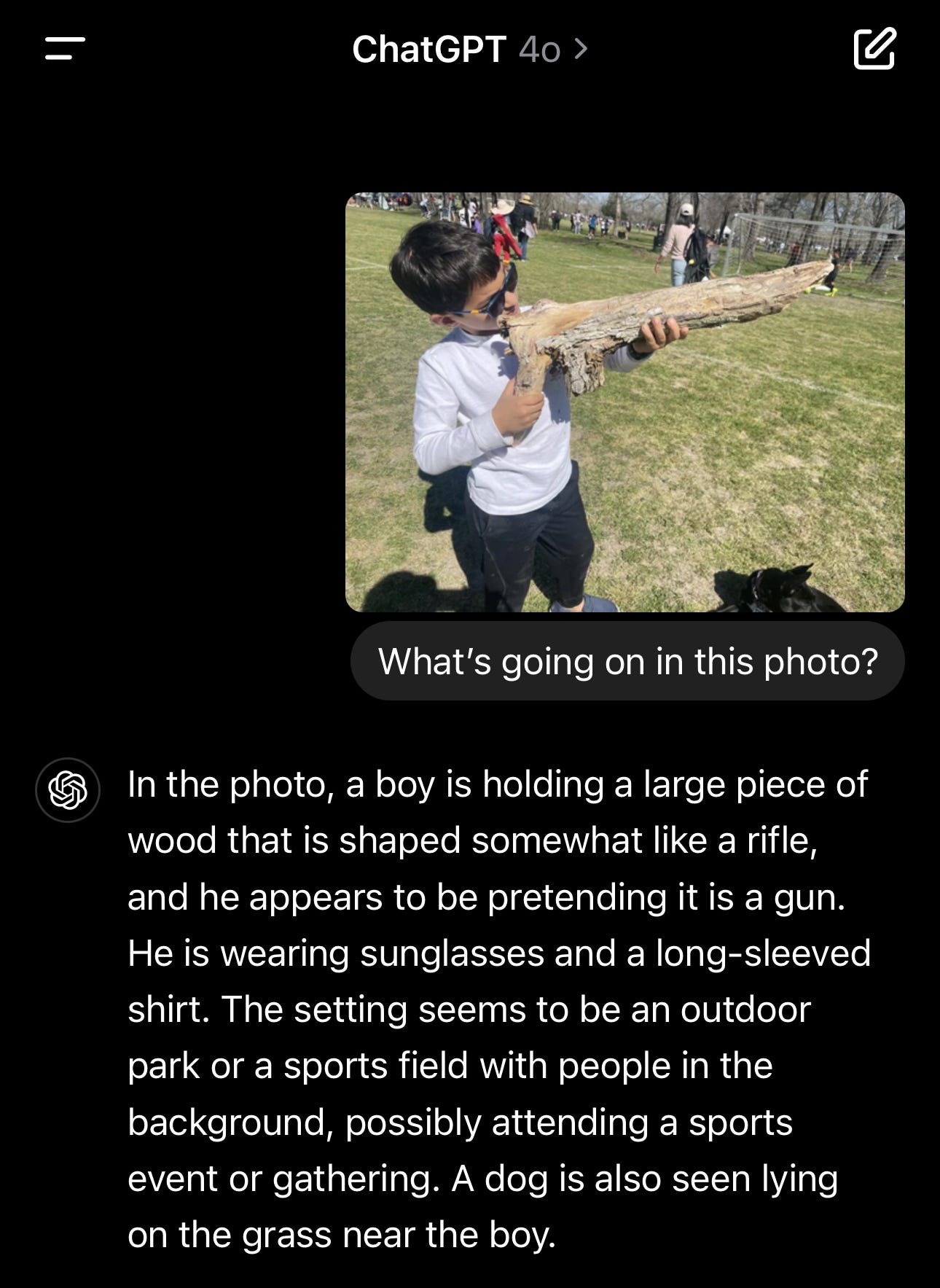

Unlike standalone image classification, an omni-model like OpenAI’s ChatGPT-4o was trained with 13 trillion (TRILLION!!!) tokens of text, audio, and video. For comparison, a machine learning model trained on labeled data typically sees only thousands of images because it takes time and labor for humans to label each one. That breadth of training allows ChatGPT to analyze the entire context of a scene, unlike image classification systems that look at specific characteristics of one target object class.

If you missed it, here is my full article: Did OpenAI create the best weapon detection software available with ChatGPT-4o?

Reinforcement Learning

Designing software that is more consistently accurate at detecting guns may require developers to move beyond the current approaches of supervised learning (training software on labeled images of guns so that the program knows the “right” answers) and unsupervised deep learning (e.g., Google or Apple’s photo categorization).

Reinforcement learning is a technique where a computer agent learns to perform a task through repeated trial and error interactions with a dynamic environment. This learning approach enables the agent to make a series of decisions that maximize a reward metric for the task without human intervention and without being explicitly programmed to achieve the task.
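To make "trial and error" concrete, here is a toy sketch of an agent learning which of three actions pays the best reward. It is not a gun detection system. The actions, the fixed rewards, and the optimistic starting estimates (a simple way to force the agent to try everything once) are all hypothetical choices for illustration.

```python
def run_agent(reward_for_action, steps=100, optimistic_start=1.0):
    """Greedy agent with optimistic starting estimates.

    The agent is never told which action is best. It starts out assuming
    every action is great, tries the one it currently rates highest,
    observes the reward, and updates its estimate for that action.
    Assumes rewards never exceed optimistic_start.
    """
    n_actions = len(reward_for_action)
    value_estimates = [optimistic_start] * n_actions
    pull_counts = [0] * n_actions
    for _ in range(steps):
        # Pick the action with the highest current estimate (ties go to the first).
        action = max(range(n_actions), key=lambda a: value_estimates[a])
        reward = reward_for_action[action]  # feedback from the "environment"
        pull_counts[action] += 1
        # Running average of the rewards seen so far for this action.
        value_estimates[action] += (reward - value_estimates[action]) / pull_counts[action]
    return value_estimates, pull_counts


# Hypothetical environment: three actions with fixed rewards between 0 and 1.
REWARDS = [0.2, 0.5, 0.9]

estimates, counts = run_agent(REWARDS)
best_action = max(range(len(estimates)), key=lambda a: estimates[a])
print(f"learned estimates: {[round(v, 2) for v in estimates]}")
print(f"times each action was tried: {counts}")
print(f"best action found: {best_action}")
```

After one disappointing try each of the first two actions, the agent settles on the third and keeps choosing it. Real environments give noisy rewards, so practical systems need more exploration than this sketch uses.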

Based on the dynamic nature of visual or sensor environments, using reinforcement learning may be an even better approach than supervised machine learning. Schools are unique environments because images from security cameras are protected student records under federal law (20 U.S.C. 1232g(a)(4)(A); 34 CFR § 99.3 “Education Record”) and a school’s CCTV images cannot be used by a third party vendor without written consent of every student on video. This means that an AI security vendor using school security cameras can’t use the data from the school for training an AI model.

Conclusion

Image classification software can appear to have very impressive capabilities during a product demo but it’s important to also recognize the limitations. When it comes to distinguishing between different types of guns, toys, and gun-shaped objects that look similar, the software can’t really distinguish the differences.

From a published paper, we know that best-in-class technology performs at around 95% accuracy. With commercial models, there is usually a black box which makes the model’s decisions, accuracy, and rate of error hard to understand. This lack of transparency is a problem for school officials who will use these systems to decide if a campus needs to go on lockdown, causing a high-risk police response as officers run through the buildings with guns drawn.

My analysis also highlights the challenge with using a CCTV image to classify a firearm because the angle, lighting, and which parts of the gun are visible drastically change the accuracy. Using systems other than cameras (e.g., 3D mapping of sensor data) may be able to generate richer data with higher accuracy than a 2D digital image.

This article may have been a bit on the nerdy and technical side, but I hope it helps school officials understand the fundamental ways that AI software is working. Let me know if you found this useful!

David Riedman is the creator of the K-12 School Shooting Database, Chief Data Officer at a global risk management firm, and a tenure-track professor at Idaho State. Listen to my recent interviews on Freakonomics Radio, the weekly Back to School Shootings podcast, and my article on CNN about AI and school security.