Did OpenAI create the best weapon detection software available with ChatGPT-4o?

A new function added in ChatGPT-4o is the ability to upload and analyze images. I tested some pictures of guns and gun-like objects to see how it performed.

More than a dozen companies market software that identifies weapons visible on CCTV at schools, businesses, and public buildings. The concept is pretty simple: AI software can spot a weapon faster than a human and immediately alert police. The problem is that these models can’t reliably tell the difference between weapons and weapon-shaped objects (e.g., umbrellas, water guns, sticks, cellphones, mops, or basically anything that has a straight line like a gun barrel).

As I wrote about in March (How do AI security products being sold to schools really work?), an image classification model is only looking for a specific object and doesn’t understand anything about the context of the whole scene.

AI weapon classification software doesn’t see a kid playing with a water gun because it doesn’t know what a kid or playing is. The classification model only sees an object that has a high probability of matching the characteristics of a gun in the training dataset.
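To make that limitation concrete, here is a minimal sketch of the decision logic a standalone classifier pipeline might use. Everything in it is an assumption for illustration: the `Detection` type, the `should_alert` helper, the 0.80 threshold, and the confidence scores are all hypothetical and do not come from any vendor’s product.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str         # class name predicted by a hypothetical model
    confidence: float  # score between 0 and 1


ALERT_THRESHOLD = 0.80  # assumed value, for illustration only


def should_alert(detections, threshold=ALERT_THRESHOLD):
    """Alert if any 'gun' detection clears the threshold.

    Nothing about the scene (who is holding the object, where,
    or why) is part of the decision.
    """
    return any(
        d.label == "gun" and d.confidence >= threshold
        for d in detections
    )


# Made-up outputs: a squirt gun and an umbrella that both resemble
# the training examples closely enough to score as "gun".
water_gun_frame = [Detection("gun", 0.91)]
umbrella_frame = [Detection("gun", 0.84)]
empty_hallway = [Detection("backpack", 0.95)]

print(should_alert(water_gun_frame))  # alert fires on a toy
print(should_alert(umbrella_frame))   # alert fires on an umbrella
print(should_alert(empty_hallway))    # no gun label, no alert
```

The point of the sketch is that the only inputs are a label and a score, so a toy and a real weapon that look alike are indistinguishable to this kind of logic.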

To get around this problem, some companies have human reviewers checking and rejecting images, which adds time, bias, and error and undermines the advantage of having AI instantly identify a threat.

ChatGPT has entered the chat

Three weeks ago, on May 13, 2024, OpenAI introduced GPT-4o ("o" for "omni"), its newest generative AI model, with the ability to create outputs across text, audio, and images in real time. Unlike the free version, the paid version of GPT-4o has response times comparable to human reaction times.

Based on the test images that I ran through ChatGPT-4o, its image analysis gives OpenAI more advanced weapon detection capabilities than anything available on the commercial market right now.

I’ve seen demos from multiple AI image classification companies, and the ability of ChatGPT-4o to correctly classify weapons versus weapon-shaped objects using the context of the entire scene is better than anything that is being used by schools right now.

My test of ChatGPT

I searched online for images of people with guns on CCTV cameras, kids with toy guns, and kids with gun-shaped objects like sticks.

I tested sixteen different images (I could do hundreds more, but this would be a very long article, so leave a comment if you want to see more tests).

For each image I gave ChatGPT-4o the simple prompt “what’s going on in this photo”.

Inference time (processing from prompt to output) was about 1-3 seconds on the mobile app.
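I timed this by hand in the app, but anyone repeating the test through code could measure the same prompt-to-output interval with a small wrapper. This is only a sketch: `timed` is a hypothetical helper, and `slow_model` is a stand-in for whatever function actually sends the image.

```python
import time


def timed(fn, *args, **kwargs):
    """Run fn and return (result, elapsed_seconds)."""
    start = time.perf_counter()
    result = fn(*args, **kwargs)
    elapsed = time.perf_counter() - start
    return result, elapsed


# Stand-in for a real model call; it just sleeps briefly.
def slow_model(prompt):
    time.sleep(0.05)
    return f"response to: {prompt}"


answer, seconds = timed(slow_model, "what is in this photo")
print(f"{seconds:.2f} s")
```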

After each photo, I closed the session and opened a new thread so the context of the first interaction wouldn’t influence the next photo.
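I ran these tests in the mobile app, not through code. For readers who want to try something similar programmatically, the sketch below builds one independent request per image, so no conversation history carries over between photos. The payload shape follows OpenAI’s Chat Completions image-input format as I understand it; the helper names `build_request` and `build_all_requests`, the `gpt-4o` model string, and the PNG default are assumptions, and nothing here sends a network request.

```python
import base64

# The same prompt used for every image in the test.
PROMPT = "what’s going on in this photo"


def build_request(image_bytes, mime_type="image/png", model="gpt-4o"):
    """Build a single-turn request containing only the prompt and one image."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    data_url = f"data:{mime_type};base64,{encoded}"
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": PROMPT},
                    {"type": "image_url", "image_url": {"url": data_url}},
                ],
            }
        ],
    }


def build_all_requests(images):
    """One fresh request per image: the equivalent of opening a new thread."""
    return [build_request(image_bytes) for image_bytes in images]


# Placeholder bytes stand in for real image files.
requests_to_send = build_all_requests([b"first image", b"second image"])
print(len(requests_to_send))
```

With the official `openai` Python package, each dictionary could presumably be passed along as `client.chat.completions.create(**request)`, but check the current API documentation before relying on that.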

ChatGPT-4o correctly identified guns versus gun-like objects in all 16 of the photos and understood the context of each scene. I’m absolutely stunned by how well this model performs.

Guns on security cameras

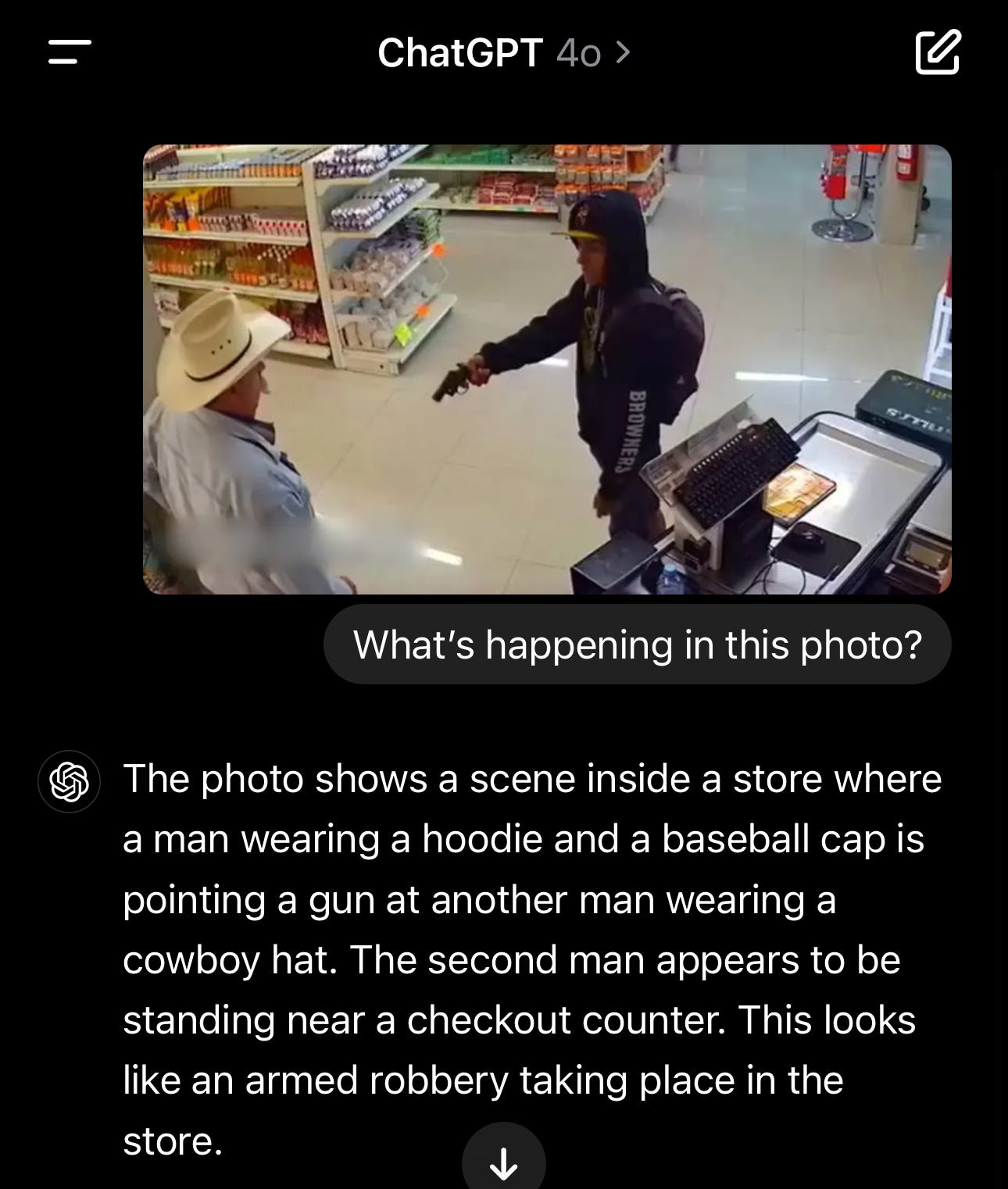

Robbery at a grocery store: Straightforward CCTV image with a gun in the middle of the frame.

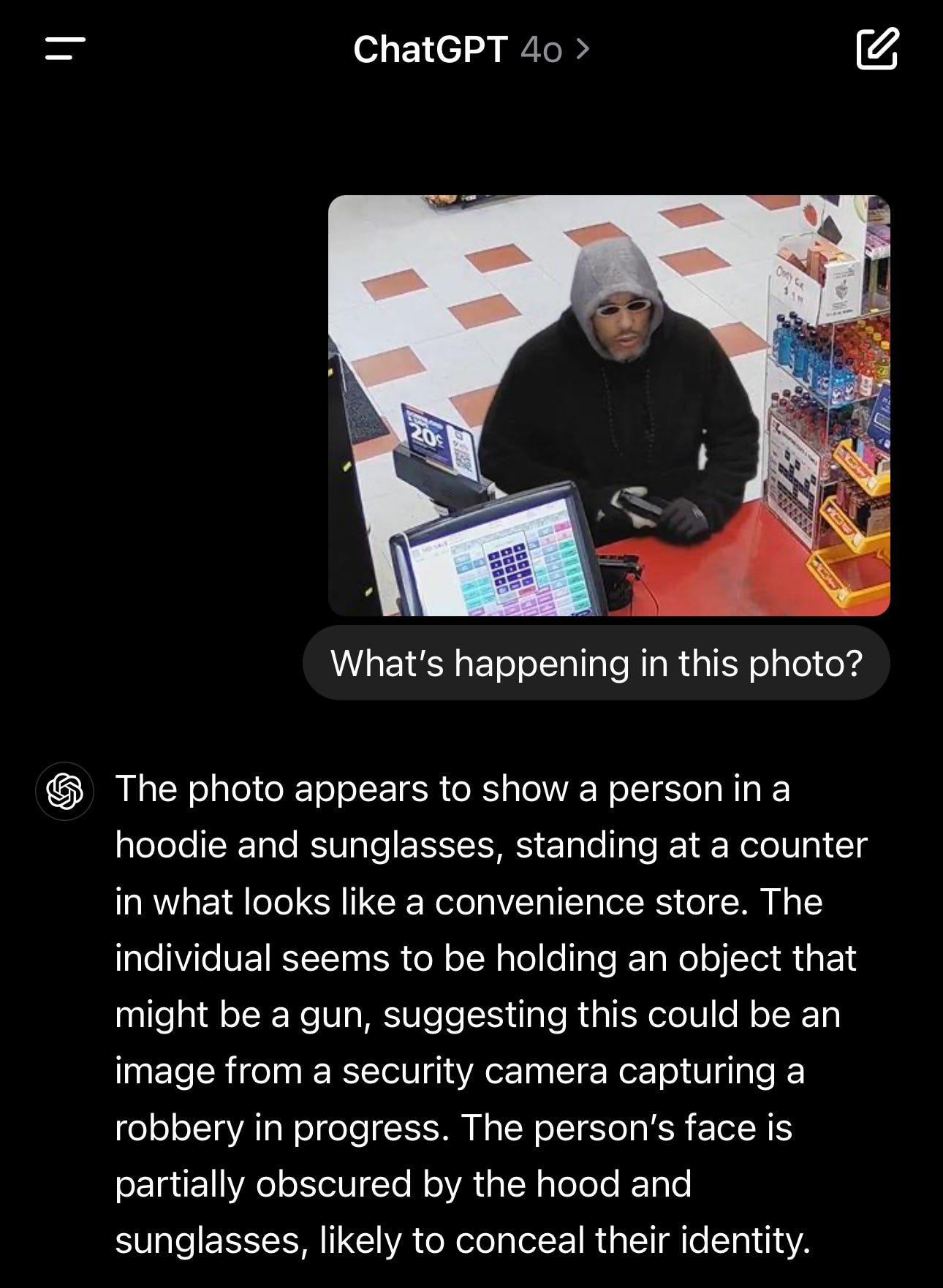

Another robbery: This image is stress testing ChatGPT because the gun is mostly obscured. For image classification software, this would be a very difficult gun to accurately categorize because very little of the distinctive shape is visible.

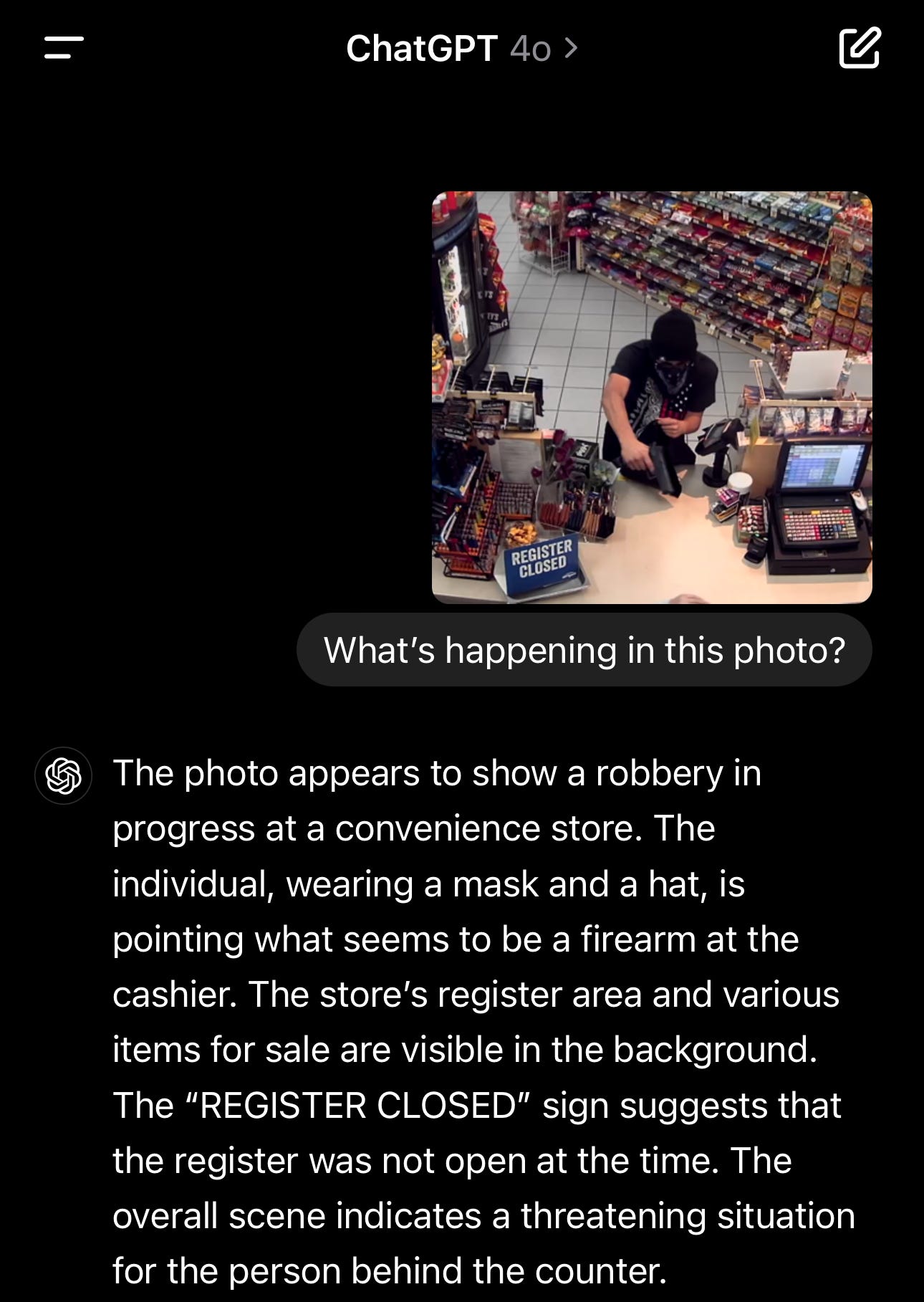

And another robbery: I picked this photo because there is a black handgun against a black shirt and black bag. Some weapon classification models have been trained with high-contrast backgrounds to make the shape of the guns more distinct, which means the training data doesn’t match real-world conditions.

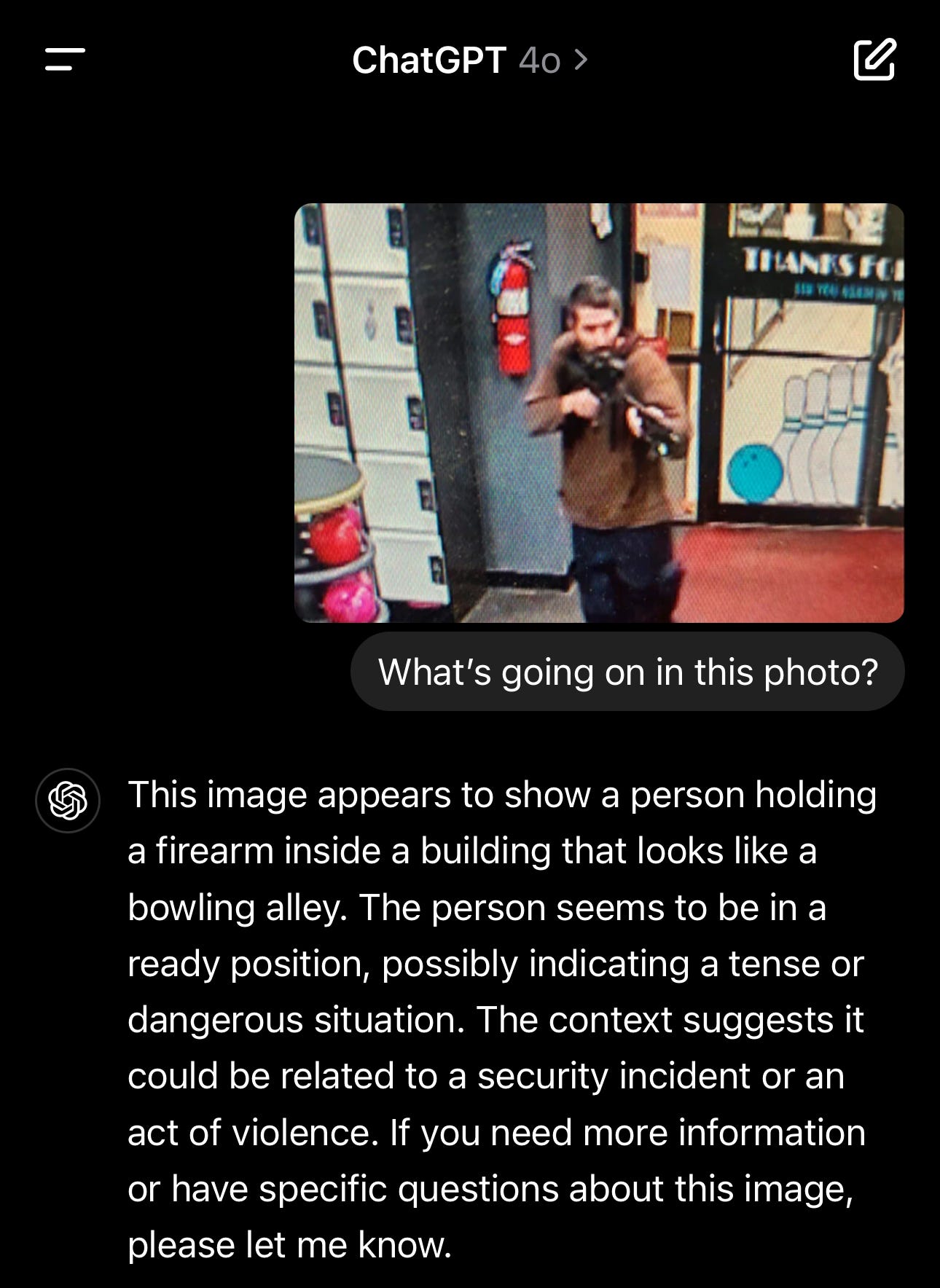

Lewiston, Maine mass shooting: I selected this image because half of the rifle is fairly obscured against the brown jacket.

Gun-like objects

Kid with an umbrella: Umbrellas are a nightmare for gun classification software because they are usually black, about the same length as a rifle, have a handle, and usually have a black tip like the end of a gun barrel.

Pointing an umbrella like a rifle: To really stress test the umbrella, I found a photo of someone holding one like it’s a rifle. If a weapon detection company has human reviewers who are rapidly clicking through images because alerts need to be sent within seconds, this would be one that could get easily confused with a real weapon depending on the context of the setting.

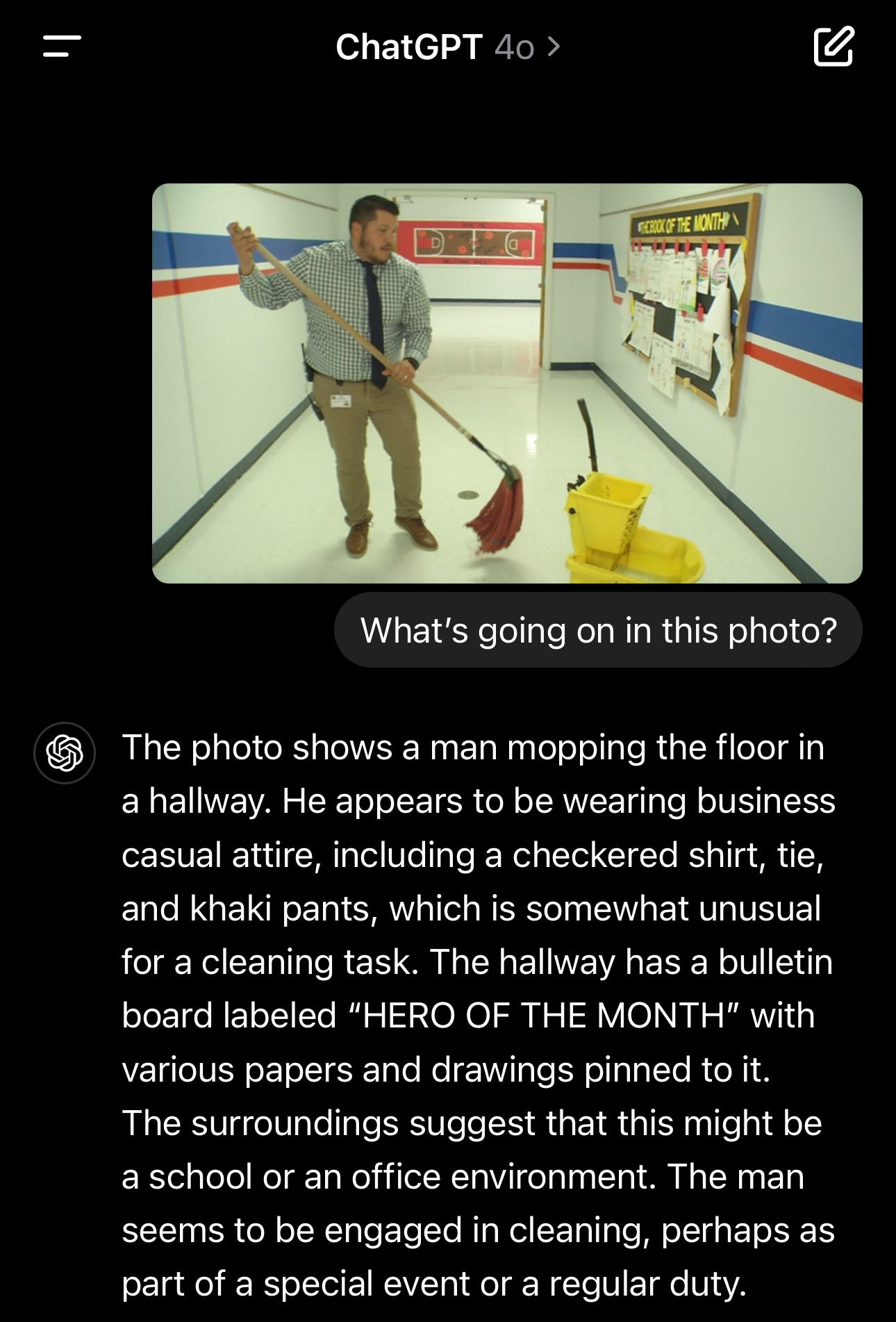

Person with a mop: From demos that I’ve seen of gun classification software, a long stick held across the body has a good chance of being flagged as a weapon because it has the same general shape and properties as a rifle barrel.

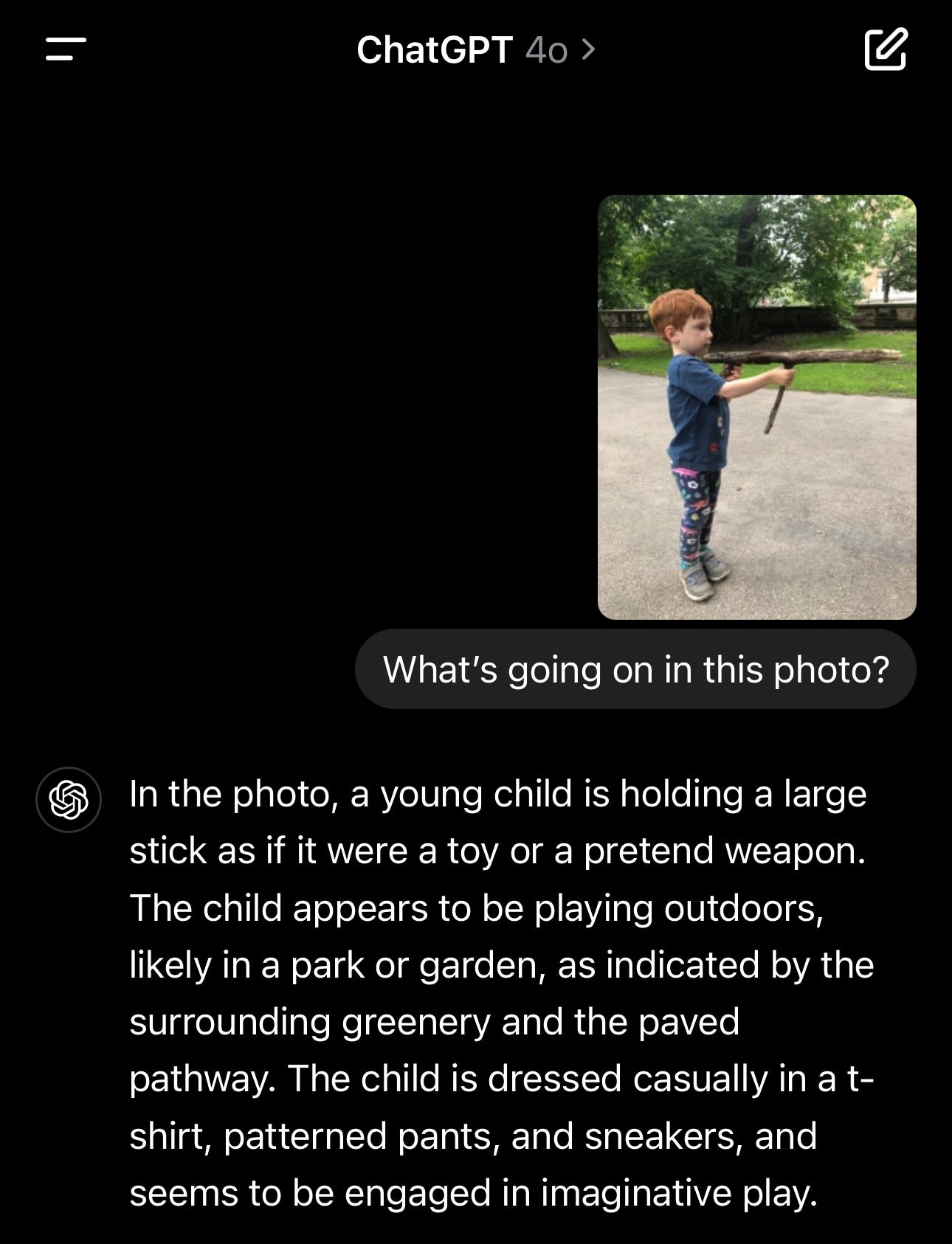

Kid holding a stick like a gun: This would be a very tricky object for a classification model because it is made of wood like a hunting rifle, has the same general dimensions as a gun (including the grip), and is being held like a gun.

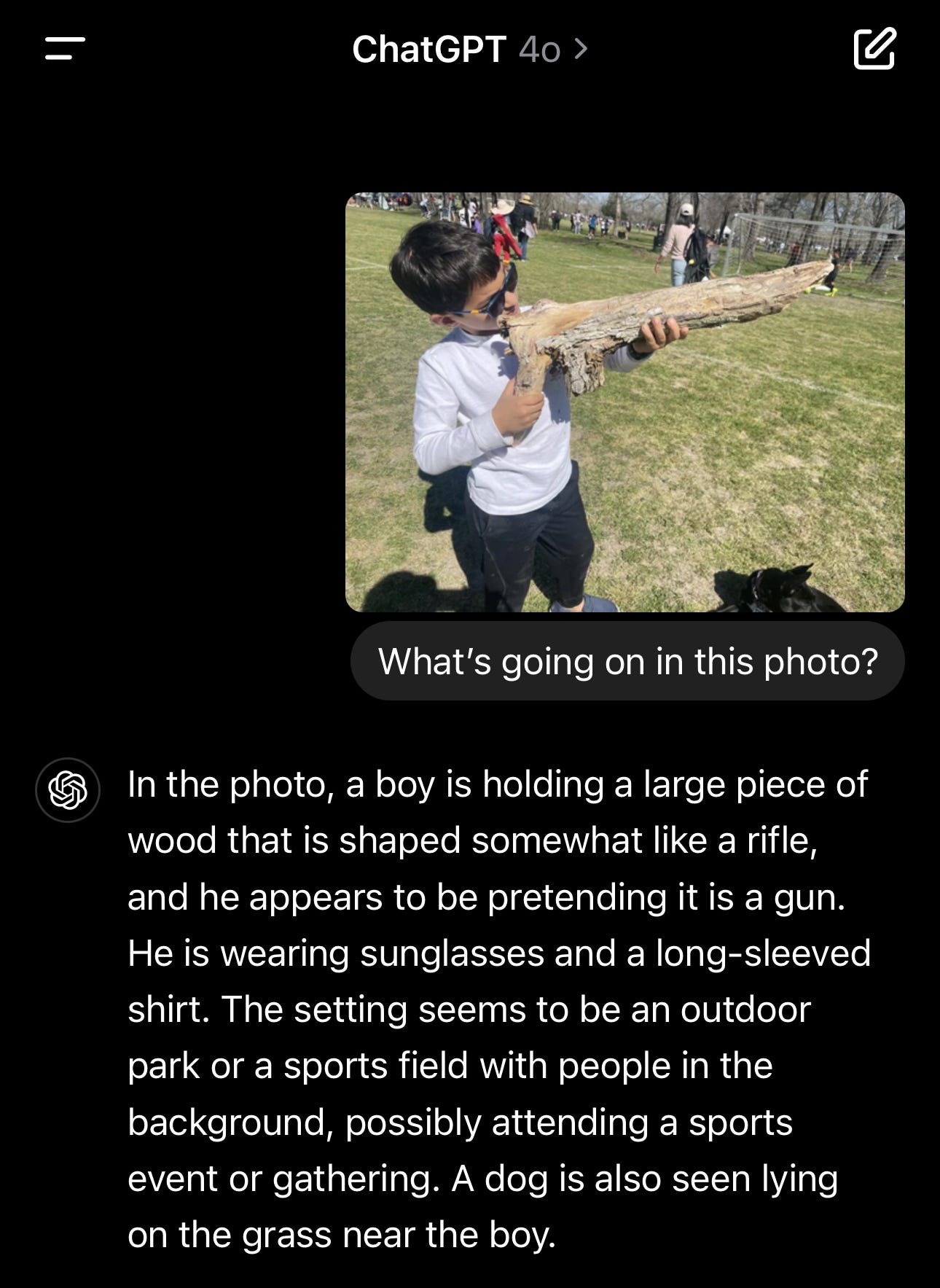

Another gun-shaped stick: This stick looks a whole lot like the shape of a gun including a broken branch sticking down like the magazine in a semi-auto rifle. If you compared the measurements along the angles of this stick, there would be a high overlap with the dimensions of a gun. To create a little extra confusion, the kid is wearing sunglasses and looking down the barrel.

Toy Guns

Kids playing with squirt guns: While both of the objects in the photo are guns, seeing the context of the situation shows that this is just kids having a good time.

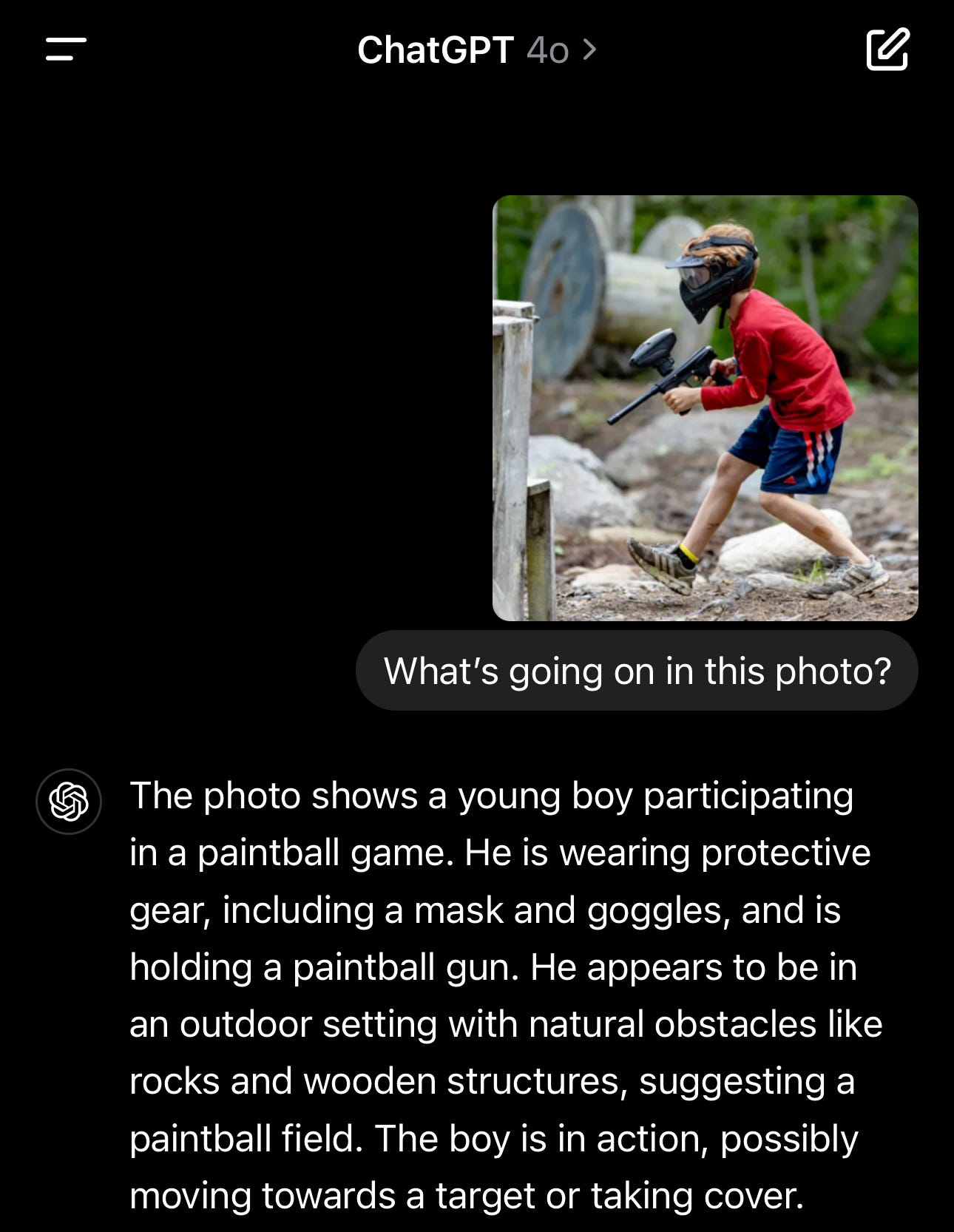

Black paintball gun: For standalone image classification software, a black paintball gun has the same characteristics as a real gun. It’s the context of the mask and goggles that explains this is a kid playing paintball.

BB guns and air rifles

[Note: I’m shocked by how good these results are.]

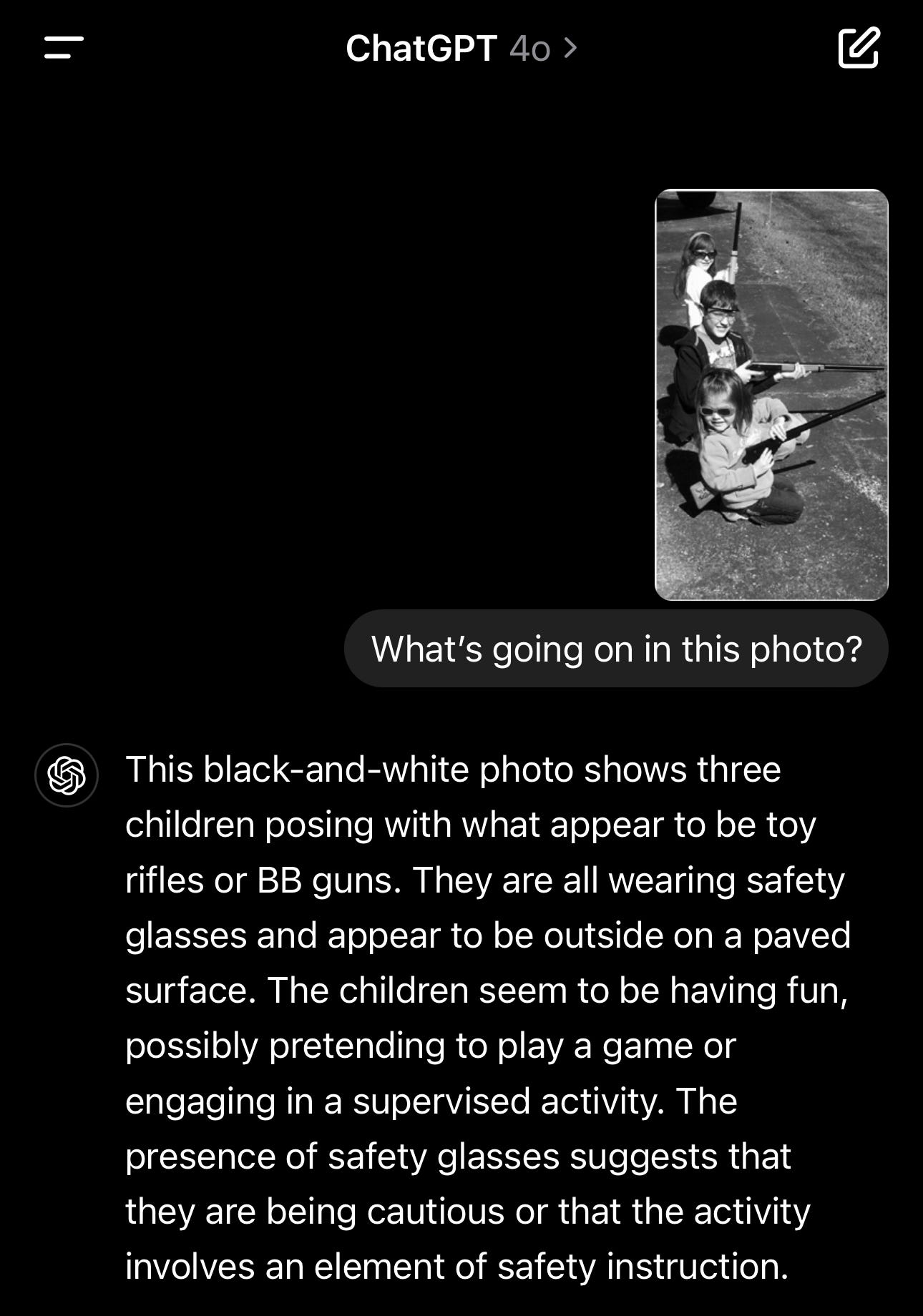

Three kids holding BB rifles: While this looks like kids with real guns and would be classified as a gun by a standalone system, the context from the faces shows that these are just kids playing.

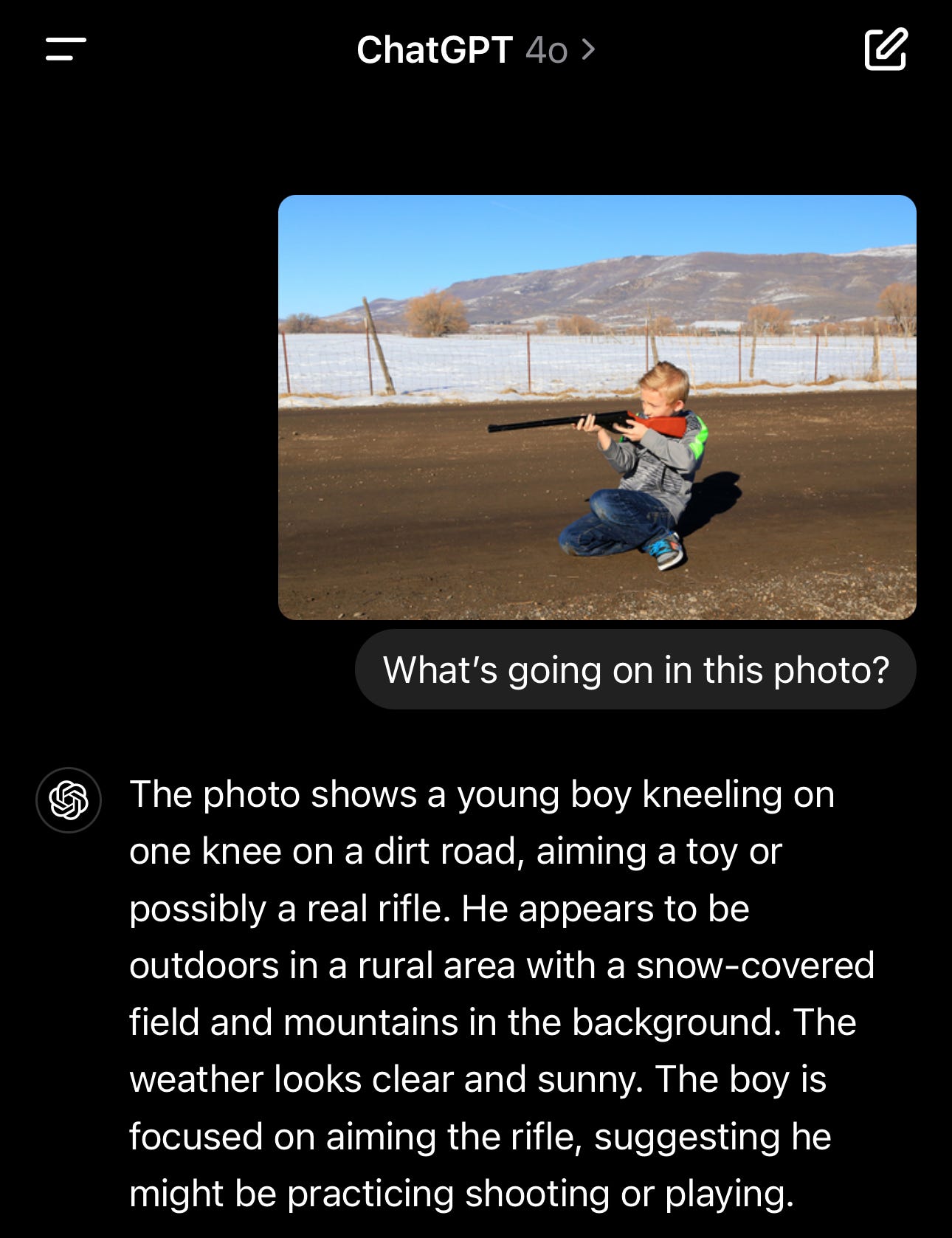

Another BB gun (or maybe real gun): In this image, ChatGPT doesn’t know if it’s a toy or real gun. This one is a tough judgement call and the explanation shows that.

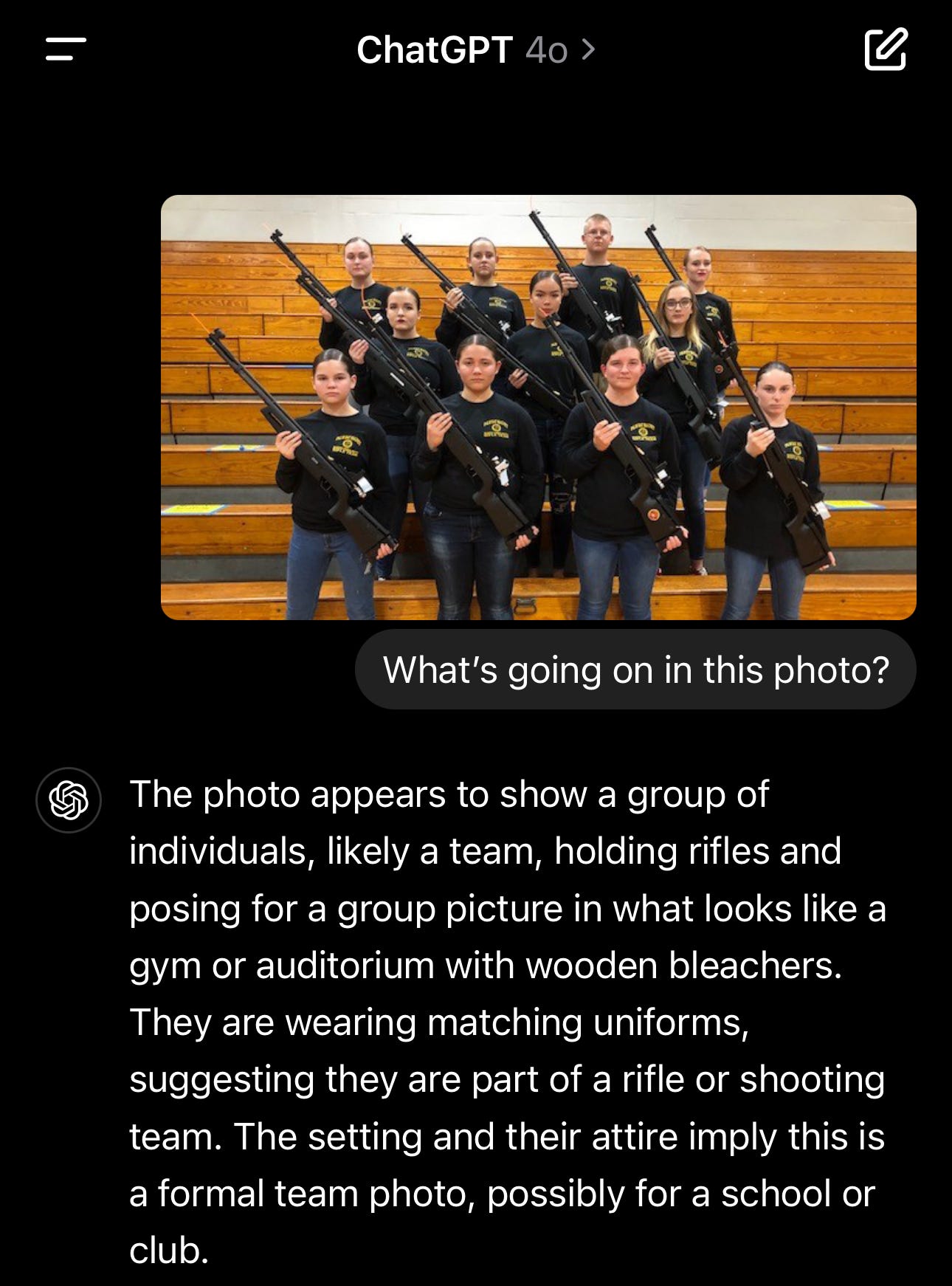

High school rifle team: A dozen kids are holding rifles inside the school gym. An object classification system should flag all 12 of these guns. The context that ChatGPT recognizes explains why this situation isn’t a threat.

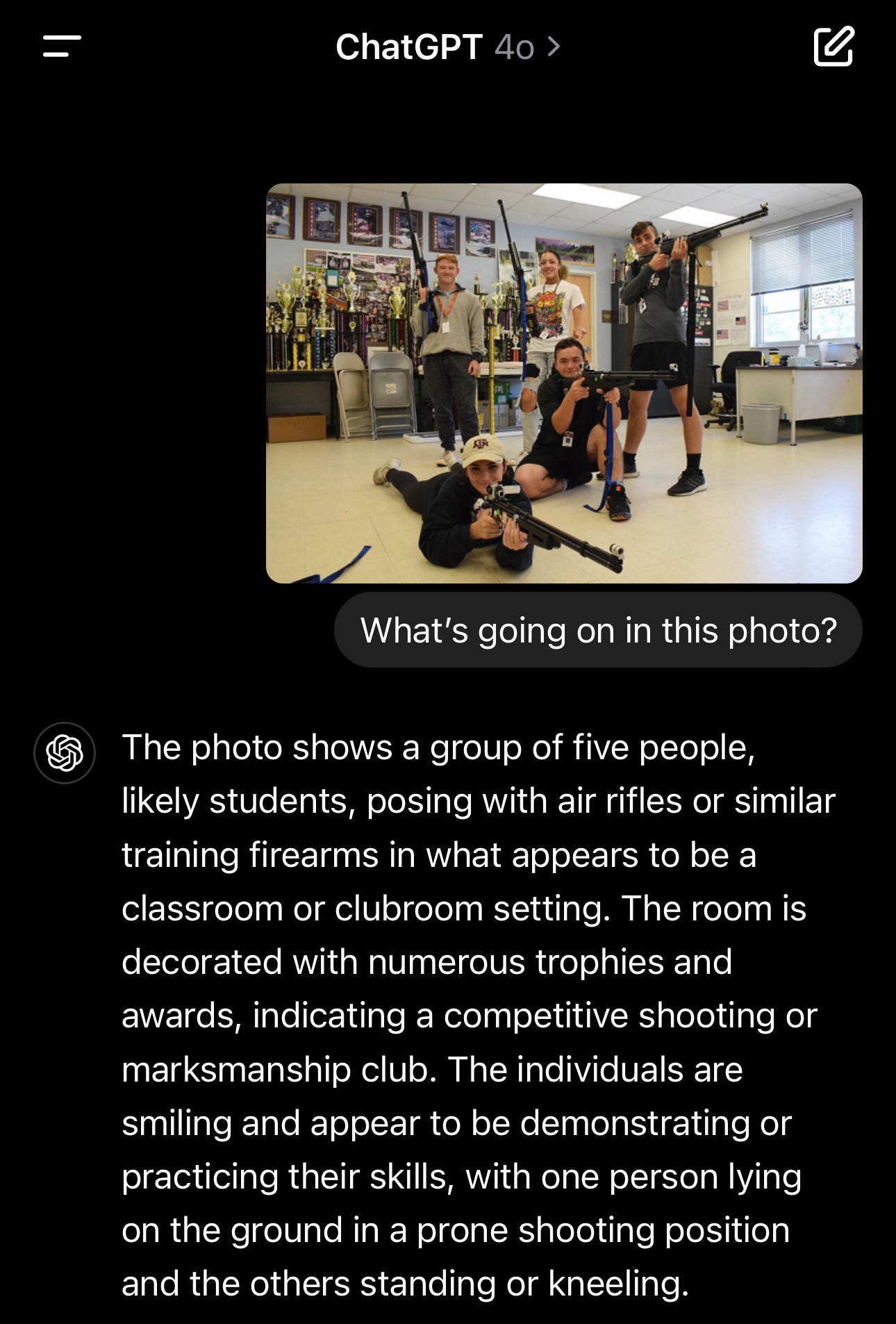

Another rifle team: I picked this photo as a stress test because the kids are inside a classroom, guns are being held in a shooting position, and the guns are being pointed in the general direction of the camera.

Not Guns

This image of a shadow caused an active shooter response at Brazoswood High School in Texas last October when an AI image classification system flagged it as a gun.

The same image in ChatGPT-4o was not identified as a gun. Unlike commercial systems being used at schools right now, ChatGPT is smart enough to know what it doesn't know. ChatGPT makes the correct assessment even with the red highlighting added on the shadow (the highlighting wouldn't be in the original image, but this was the only version available online).

Unlike a human reviewer employed to audit images for specific objects (e.g., weapons), ChatGPT doesn't have a bias to see an unknown object as a gun. ChatGPT even suggests how to handle the situation in a reasonable way that doesn’t involve sending dozens of police officers racing to the school for an active shooter.

Implications for school security

Based on these images, I think ChatGPT-4o is the most advanced weapon detection technology ever created, and this is just the start! There will be another more powerful and more functional ChatGPT version in the next few months that will do an even better job of analyzing still images and videos.

On the DataFramed podcast (a weekly data science show), they discussed the history of data and the future of AI. Advanced models like ChatGPT are going to make some companies and workers more productive while making others irrelevant.

For example, the power of AI has made transcription services irrelevant because a model can do the work better, faster, and cheaper than a human. ChatGPT-4o is the start of the sunset on standalone image classification companies. Legacy weapon classification software might be trained on a couple million images. The new version of ChatGPT-4o has been trained on ~13,000,000,000,000 (13 trillion), which is why it understands the context of the situations so well. The next version is likely to have 3-4x more training data!

There is no reason for a school system to sign a multi-million dollar contract for software that has a fraction of the capabilities that OpenAI offers for $20/month. Within a matter of weeks or months, developers can build systems using OpenAI’s infrastructure to offer the best weapon detection software available for a fraction of the current costs.

David Riedman is the creator of the K-12 School Shooting Database. Listen to my new podcast Back to School Shootings, or catch up on my prior interviews including Freakonomics Radio and New England Journal of Medicine.