Part 4: Ways to reduce noise when assessing school shooting threats

Groups of people make better predictions than individuals. Using the wisdom of crowds can reduce variability (noise) in decision-making.

Nobel Prize-winning economist Daniel Kahneman died in March 2024. I started the research for this paper when I read his book, Noise: A Flaw in Human Judgment, in 2021. His last long-form interview, on Freakonomics Radio, was about Noise.

Noise is the unwanted variability in decisions made by experts who are looking at exactly the same information.

This series of Substack articles is based on a survey that I conducted after reading Kahneman’s book Noise in 2021. I cowrote a subsequent academic journal article on the survey results with Dr. Jillian Peterson, Dr. James Densley, and Gina Erickson from The Violence Project.

A solution for addressing noise is having multiple people make assessments and then using the average from the group. In his 2005 book The Wisdom of Crowds, New Yorker business columnist James Surowiecki explored a deceptively simple idea: Large groups of people are smarter than an elite few, no matter how brilliant. Groups are better at solving problems, fostering innovation, coming to wise decisions, and even predicting the future.

The most famous example of this theory comes from a 1906 livestock fair in Plymouth, England, where about 800 people entered a contest to estimate the weight of an ox. Statistician Francis Galton observed that the median (middle value) of the guesses, 1,207 pounds, was within 1% of the true weight.

A collection of individual judgments can often be modeled as a normal probability distribution centered near the true value of the quantity, so that the median and the mean of all the guesses both land close to it.

Following this normal distribution theory, a few scores will be way off while the majority cluster near the group average. This is exactly what our data from the school shooting threats survey showed.
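As a rough illustration of this idea, the sketch below simulates a crowd of guessers whose errors are independent and unbiased, then compares the crowd median with the worst individual guess. Everything here is an assumption made for illustration: the true value, the spread of the guesses, and the function name `simulate_crowd` are hypothetical, and the numbers are simulated rather than taken from Galton's contest or from our survey.

```python
import random
import statistics


def simulate_crowd(true_value, n_guessers, noise_sd, seed=0):
    """Return n_guessers simulated guesses scattered around true_value.

    Assumes each guess is unbiased and independent of the others,
    with normally distributed error (standard deviation noise_sd).
    """
    rng = random.Random(seed)
    return [rng.gauss(true_value, noise_sd) for _ in range(n_guessers)]


# Hypothetical values chosen for illustration only.
TRUE_WEIGHT = 1200.0
guesses = simulate_crowd(TRUE_WEIGHT, n_guessers=800, noise_sd=75.0)

crowd_median = statistics.median(guesses)
crowd_error = abs(crowd_median - TRUE_WEIGHT)
worst_individual_error = max(abs(g - TRUE_WEIGHT) for g in guesses)

print(f"Crowd median error:     {crowd_error:.1f}")
print(f"Worst individual error: {worst_individual_error:.1f}")
```

The pattern only holds under the stated assumptions. If every guesser shares the same bias, the median inherits that bias no matter how large the crowd is.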

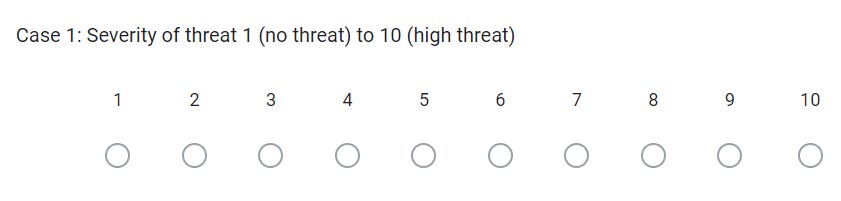

For five of the six scenarios, threat severity scores ranged from 1 to 10 with averages between 3.5 and 7.5. This means that some officers assessed a scenario as “10” (high threat) while the entire group of 245 officers collectively rated it as 3.5. Conversely, some officers rated a scenario as “1” (no threat) while the aggregate group score was 7.5 (moderately high threat). Most scored somewhere near the group average.

Quick recap of the study

While we expect police officers to consistently assess school shooting threats, our study found there is a significant amount of noise—or unwanted variability—in their decisions.

In August 2021, an electronic survey was sent by email to 600 law enforcement alumni of the Center for Homeland Defense and Security and all members in good standing of the National Association of School Resource Officers. Read more about the methods in Part 2.

The survey yielded responses from 245 law enforcement practitioners directly responsible for assessing threats. They rated the severity of six fictitious vignettes, constructed from common themes identified in real school shooting threats, on a Likert scale from 1 (no threat) to 10 (high threat). Read more about the details of each fictional scenario and the responses in Part 3.

Recommendations

The psychological concept of “naive realism” describes the human tendency to believe we perceive the world as an objective reality instead of as a subjective construction built from our own lived experience. An experienced police officer is an expert who is trained to think both that their judgment is accurate and that other officers would see the situation the same way they do. This is naive realism.

The findings from this study suggest similarly trained and qualified experts in school threat assessment, like police chiefs and school police officers, interpret identical school safety situations very differently. Kahneman argues naive realism is why such “noise” in human judgment and decision-making exists, but we cannot perceive it or understand it without statistical data to detect it.

This is the first study that demonstrates the existence of noise in the life-or-death context of school shooting threat assessment.

In practice, evaluating a school shooting threat often comes down to a yes or no decision. Either the threat is real and action must be taken, or the threat is baseless and classes can continue. Errors due to variability in personal decision making in this binary (yes or no) judgment can lead to disaster. If an expert rates the threat too low, a shooting can occur. This happened at Oxford High School in November 2021 with fatal consequences. If an expert rates the threat too high, schools will close unnecessarily without a real risk of violence. This also happened after the Oxford school shooting when hundreds of online hoaxes closed schools across the country.

While there was a huge amount of variability in the individual scoring of the severity of each case, the median value (the line in the middle of each box in the chart above) of all 245 officers’ ratings provides a pretty good assessment of each situation.

The patterns in the differences and how far answers deviate from the average are evidence of error but not necessarily individual bias. Noise only exists in aggregate and no single case explains it. Noise can only be analyzed with data to determine patterns in the extraneous factors that influence aggregate judgments.

For example, the results of this study showed that prior experience responding to a school shooting was associated with a pattern of higher ratings when assessing threats. That does not mean every individual with that experience rated threats higher, but as a group their ratings differed from those of other officers by a statistically significant margin.

Across all six of the school shooting threat vignettes, there was a consistent pattern of system noise. The consequence of this noise is that one expert might close a school and arrest a student while another would take no action.

Analyses show that participants tended to be consistent in their high or low ratings across scenarios, indicating their personal background and characteristics were related to their interpretation as opposed to the details of the threat scenario itself.
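One simple way to look for this kind of rater-level consistency is to compare each rater's scores with the group mean for the same scenario and average the differences. The sketch below is a hypothetical illustration, not the analysis used in our study: the function name `rater_offsets`, the rater labels, and the scores are all made up.

```python
import statistics


def rater_offsets(ratings):
    """Average amount by which each rater scores above or below the group.

    ratings maps a rater label to a list of scores, one per scenario,
    with every rater scoring the same scenarios in the same order.
    """
    raters = list(ratings)
    n_scenarios = len(ratings[raters[0]])
    if any(len(ratings[r]) != n_scenarios for r in raters):
        raise ValueError("every rater must score every scenario")

    # Group mean for each scenario across all raters.
    scenario_means = [
        statistics.fmean([ratings[r][i] for r in raters])
        for i in range(n_scenarios)
    ]
    # Each rater's mean deviation from those group means.
    return {
        r: statistics.fmean(
            [score - mean for score, mean in zip(ratings[r], scenario_means)]
        )
        for r in raters
    }


# Made-up scores on the 1-10 scale for three scenarios.
example = {
    "officer_a": [8, 9, 7],
    "officer_b": [3, 4, 2],
    "officer_c": [5, 6, 5],
}
offsets = rater_offsets(example)
for rater, offset in offsets.items():
    print(f"{rater}: {offset:+.2f}")
```

A rater whose offset stays well above or below zero across scenarios is consistently rating high or low relative to peers, regardless of the details of each case.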

In the end, there are many possible reasons why law enforcement practitioners could disagree on intended actions, and they may reflect different beliefs and values that are totally appropriate and normative with respect to making such decisions.

Likewise, the law enforcement practitioner must consider the relative costs of the two types of error in the decisions:

Not taking action, in order to avoid disruption, when the threat is real.

Taking action that disrupts the school when the threat is a hoax.

The choice to err in either direction may reflect the values of each community and school, whereby some schools may be more tolerant to cancelling class for an “abundance of caution” while other schools want to avoid disruptions.
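The trade-off between the two errors can be sketched with a basic expected-cost comparison. This is a textbook decision-theory illustration, not a tool from our study, and it assumes something that is rarely possible in practice: that a community could put relative numbers on the cost of a false alarm and the cost of a missed threat. The function name `action_threshold` and the cost values are hypothetical.

```python
def action_threshold(cost_false_alarm, cost_missed_threat):
    """Probability of a real threat above which acting costs less on average.

    Acting when the threat is a hoax costs cost_false_alarm.
    Not acting when the threat is real costs cost_missed_threat.
    Acting is the cheaper choice when
        p * cost_missed_threat > (1 - p) * cost_false_alarm,
    which rearranges to p > cost_false_alarm / (sum of both costs).
    """
    if cost_false_alarm < 0 or cost_missed_threat <= 0:
        raise ValueError("costs must be non-negative, missed-threat cost positive")
    return cost_false_alarm / (cost_false_alarm + cost_missed_threat)


# Hypothetical relative costs in arbitrary units.
cautious = action_threshold(cost_false_alarm=1, cost_missed_threat=99)
balanced = action_threshold(cost_false_alarm=1, cost_missed_threat=1)
print(f"Cautious community acts above p = {cautious:.2f}")
print(f"Equal-cost community acts above p = {balanced:.2f}")
```

The point of the sketch is that two communities looking at the same threat can reasonably reach different decisions because they weigh the two errors differently, which is a difference in values rather than noise.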

The findings from this survey highlight a dire need for national guidelines, standardized assessment tools, and training for school officials, mental health providers, and law enforcement practitioners. The variability in a yes/no decision that decides the safety of children inside a school can be fatal. When experts consistently rate high or low, this aligns with a “stable noise pattern” where subjects weigh identical criteria in a case differently from other experts due to differences like personality or prior experience. This shows a high level of bias influencing decisions that should be objective.

Using the wisdom of crowds

Kahneman’s recommendation is that decisions like this should be averaged across groups of experts. The principle known as “The Wisdom of Crowds” has shown in many different contexts that aggregated judgments produce better results. As more informed, independent judgments are collected, their aggregate tends to move closer to the right answer and noise is reduced, because randomly high and randomly low judgments offset one another.
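The statistical reasoning behind this can be written out in a few lines. It is a standard result rather than something specific to our survey, and the symbols are introduced here for illustration only: $\theta$ is the (unknown) appropriate severity score, $X_i$ is assessor $i$'s score, and $\varepsilon_i$ is that assessor's error, assumed to be independent across assessors with mean zero and standard deviation $\sigma$.

```latex
X_i = \theta + \varepsilon_i, \qquad
\bar{X} = \frac{1}{n} \sum_{i=1}^{n} X_i, \qquad
\operatorname{SD}(\bar{X}) = \frac{\sigma}{\sqrt{n}}
```

Under these assumptions, averaging four independent assessments halves the noise and averaging 100 cuts it to one tenth. Averaging does nothing for an error that all assessors share, which is why it reduces noise but not common bias.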

Basing a decision on an individual judgment brings the risk that it is the high or low outlier.

Many schools use multi-disciplinary threat assessment teams to analyze shooting threats, but the findings here suggest that interpretation of the severity of a given threat may be better conducted individually, with the responses then aggregated. Making decisions in a group setting where discussion leads to a consensus can have the same biases as individual decisions. Having everyone assess the situation separately and then using the average value of the results can remove this bias.
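A minimal sketch of this workflow is shown below: each team member submits a score privately, and only then are the scores combined. The function name `aggregate_independent_ratings` and the example scores are hypothetical, and the 1 to 10 range simply mirrors the scale used in our survey.

```python
import statistics


def aggregate_independent_ratings(ratings):
    """Combine severity scores collected separately from each assessor.

    ratings is a list of scores on a 1-10 scale, one per assessor,
    submitted before any group discussion takes place.
    """
    if not ratings:
        raise ValueError("at least one rating is required")
    if any(r < 1 or r > 10 for r in ratings):
        raise ValueError("ratings must be between 1 and 10")
    return {
        "median": statistics.median(ratings),
        "mean": statistics.fmean(ratings),
        "lowest": min(ratings),
        "highest": max(ratings),
    }


# Made-up scores from five assessors, including one high outlier.
summary = aggregate_independent_ratings([3, 4, 4, 5, 10])
print(summary)
```

Reporting the lowest and highest scores alongside the median keeps the outlier visible, so the team can ask why one assessor saw the case so differently instead of silently averaging the disagreement away.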

Where there is no formal threat assessment team, the tragedy at Oxford High School and other school shootings like it show why a single person should not make a critical decision on a threat: in real life, that person has been the low outlier. In small jurisdictions without the resources for a multidisciplinary team, creating a system where outside experts can assess and evaluate threats could help aggregate multiple decisions to balance any outliers. In a jurisdiction of any size, implementing a system that elicits as many informed opinions as possible should yield more consistent results.

Before new training, policies, or procedures are put in place, a noise audit should be conducted to see how much noise exists within the current process for assessing threats. Noise can only be identified by analyzing a collection of decisions.
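In its simplest form, a noise audit asks several assessors to score the same cases independently and then measures how much the scores disagree. The sketch below shows one possible summary, assuming scores on a 1 to 10 scale. The function name `noise_audit`, the case labels, and the scores are hypothetical, and standard deviation is only one of several reasonable measures of spread.

```python
import statistics


def noise_audit(ratings_by_case):
    """Summarize disagreement among assessors for each case.

    ratings_by_case maps a case label to the list of scores that
    different assessors gave to that same case.
    """
    report = {}
    for case, ratings in ratings_by_case.items():
        if len(ratings) < 2:
            raise ValueError(f"case {case!r} needs at least two ratings")
        report[case] = {
            "n": len(ratings),
            "median": statistics.median(ratings),
            "stdev": statistics.stdev(ratings),  # sample standard deviation
            "range": max(ratings) - min(ratings),
        }
    return report


# Made-up scores: assessors disagree far more on case_a than on case_b.
report = noise_audit({
    "case_a": [2, 3, 3, 4, 9],
    "case_b": [6, 7, 7, 8, 8],
})
for case, stats in report.items():
    print(case, stats)
```

Running the same summary before and after a change in training or procedure gives a like-for-like comparison of how much the disagreement has shrunk.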

After conducting a training session, forming a threat assessment team, or publishing new guidance, a follow-up noise audit can show if the changes have decreased noise. It is important to understand that consistency does not equal being correct. It only takes one person to make the right judgment call and stop a school shooting—the mean or average could be wrong. This has important implications for policy and practice including the need for systematic data collection to create the tools that can help us understand the noise we should listen to and why.

Using an algorithm instead of human judgment

Finally, with better data on the nature and extent of school shooting threats and their outcomes, there is the potential to use artificial intelligence and big data tools to develop algorithms for assessing threats rather than leaving it to one or more people to make individual, subjective decisions.

When an algorithm is wrong, it is more likely to consistently make explainable errors that can be improved by modifying the evaluation criteria. Unlike a computer program, human judgment creates variable errors for less explainable reasons that are not easily changed.

Even if a threat assessment algorithm does not have the final say in addressing a threat, an objective computer assessment could help normalize the decision of a human assessor much like the computer programs already used across the criminal justice system to extend traditional “risk factor” assessment tools. These tools are already being used to help judges decide if bail or parole should be granted.

Most Important Finding

Individual police officers made wildly inconsistent assessments when deciding the severity of school shooting threats. This has real-world consequences because missing a threat can be deadly while overreacting unnecessarily shuts down a school.

Having multiple officers evaluate a threat individually and then using an aggregate of their scores yields more consistent results. This process has the potential to be augmented by artificial intelligence to further standardize assessment criteria.

Our most important finding is that no single person should be tasked with assessing the severity of a threat on their own.

David Riedman is the creator of the K-12 School Shooting Database and an internationally recognized expert. Listen to my recent interviews on Freakonomics Radio, New England Journal of Medicine, and Wisconsin Public Radio after the Mount Horeb Middle School shooting.